LeCun, Bengio, Hinton - Deep Learning

LeCun, Yann, Yoshua Bengio, and Geoffrey Hinton. “Deep Learning.” Nature 521, no. 7553 (May 2015): 436–44. https://doi.org/10.1038/nature14539.

Summary

Deep learning uses models with multiple processing layers to learn representations of data with multiple levels of abstraction.

Supervised Learning

- most common form of machine learning
- show the machine inputs together with their annotations (labels)
- create an objective function that measures the error between the output and the desired output
- most practitioners minimize it with stochastic gradient descent (SGD)
- known since the 1960s that linear classifiers can only carve their input space into very simple regions (half-spaces separated by a hyperplane)
- with multiple non-linear layers (a depth of, say, 5 to 20), a system can implement extremely intricate functions of its inputs
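A minimal sketch of the supervised recipe above, under assumptions that are not taken from the paper: the objective is squared error, the model is a one-input linear function y = w*x + b, and each update uses a single example (the paper describes averaging the gradient over a few examples at a time). The helper names `sgd_linear_regression` and `mean_squared_error` are hypothetical.

```python
import random

def sgd_linear_regression(data, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w*x + b by minimizing a squared-error objective with SGD.

    Each update uses the gradient of the error on one example, which is
    the "stochastic" part of stochastic gradient descent.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    examples = list(data)
    for _ in range(epochs):
        rng.shuffle(examples)  # visit the examples in a new random order each epoch
        for x, y in examples:
            error = (w * x + b) - y  # prediction minus target
            # Per-example objective: 0.5 * error**2
            # so dObjective/dw = error * x and dObjective/db = error
            w -= lr * error * x
            b -= lr * error
    return w, b

def mean_squared_error(data, w, b):
    """Average squared error of the linear model over the whole dataset."""
    return sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)

# Hypothetical toy data lying exactly on the line y = 2x + 1.
data = [(i / 10, 2 * (i / 10) + 1) for i in range(-10, 11)]
w, b = sgd_linear_regression(data)
print(round(w, 3), round(b, 3), mean_squared_error(data, w, b))
```

Because the toy data are noise-free, every per-example gradient vanishes at w = 2, b = 1, so the updates settle there rather than jittering around the optimum as they would with noisy labels.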

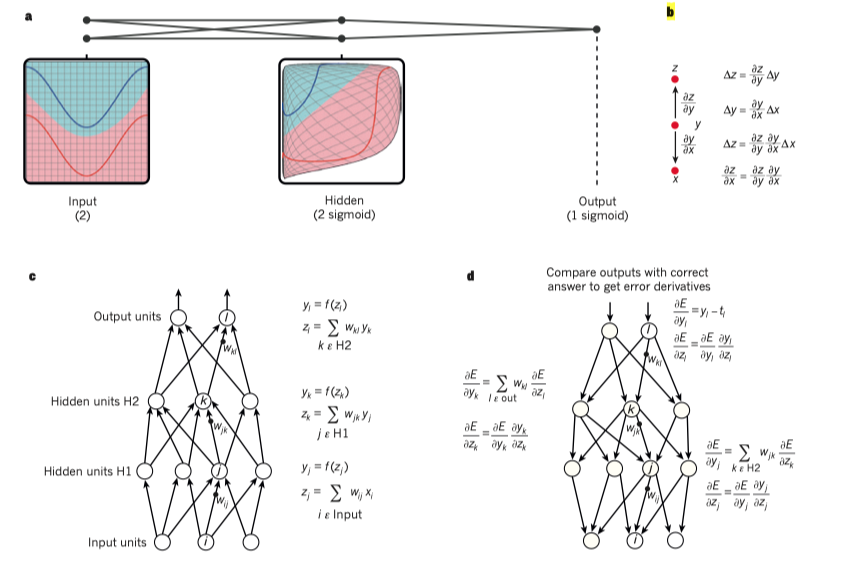
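The limitation of linear classifiers, and how a non-linear layer removes it, can be illustrated with XOR: no single line separates {(0,1), (1,0)} from {(0,0), (1,1)}. The sketch below uses hand-picked weights (an illustrative assumption, not a learned network and not one from the paper) in which two ReLU hidden units re-map the inputs so that a purely linear output unit computes XOR. The names `relu` and `xor_net` are hypothetical.

```python
def relu(z):
    """Rectified linear unit: max(z, 0)."""
    return max(z, 0.0)

def xor_net(x1, x2):
    """Two-layer network with hand-picked (not learned) weights computing XOR.

    Hidden layer: two ReLU units. Output: a linear combination of them.
    """
    h1 = relu(x1 + x2)        # counts how many inputs are on
    h2 = relu(x1 + x2 - 1.0)  # non-zero only when both inputs are on
    return h1 - 2.0 * h2      # linear output unit

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((x1, x2), xor_net(x1, x2))
```

In hidden-unit coordinates (h1, h2) the four inputs land at (0, 0), (1, 0), (1, 0) and (2, 1), where the linear function h1 - 2*h2 separates the two classes.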

Backpropagation to train multilayer architectures

- the derivative of the objective with respect to the input of a module can be computed by working backwards from the gradient with respect to the output of that module (an application of the chain rule)

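- feedforward networks learn to map a fixed-size input (e.g. an image) to a fixed-size output (e.g. a probability for each category); the most popular non-linearity is the ReLU, f(z) = max(z, 0)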

- in the late 1990s it was commonly thought that simple gradient descent would get trapped in poor local minima

- in practice, poor local minima are rarely a problem for gradient descent in large networks
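Backpropagation is the chain rule applied module by module. The sketch below assumes a hypothetical network (two inputs, one hidden layer of two ReLU units, a linear output, and a half-squared-error objective) and computes every gradient by working backwards from dL/dy, then checks the first-layer gradients against central finite differences. The weights, input and target are arbitrary example values, and all function names are illustrative.

```python
def relu(z):
    return max(z, 0.0)

def forward(params, x):
    """Forward pass: z = W1 x + b1, h = ReLU(z), y = w2 . h + b2."""
    W1, b1, w2, b2 = params
    z = [sum(wjk * xk for wjk, xk in zip(row, x)) + bj for row, bj in zip(W1, b1)]
    h = [relu(zj) for zj in z]
    y = sum(w2j * hj for w2j, hj in zip(w2, h)) + b2
    return z, h, y

def loss(params, x, t):
    """Objective for one example: half the squared error."""
    _, _, y = forward(params, x)
    return 0.5 * (y - t) ** 2

def backward(params, x, t):
    """Apply the chain rule from the output back to the first-layer weights."""
    W1, b1, w2, b2 = params
    z, h, y = forward(params, x)
    dy = y - t                          # dL/dy
    dw2 = [dy * hj for hj in h]         # dL/dw2[j] = dL/dy * h[j]
    db2 = dy                            # dL/db2 = dL/dy
    dh = [dy * w2j for w2j in w2]       # gradient w.r.t. the hidden layer's output
    dz = [dhj * (1.0 if zj > 0 else 0.0)  # through ReLU: derivative 1 if z > 0, else 0
          for dhj, zj in zip(dh, z)]
    dW1 = [[dzj * xk for xk in x] for dzj in dz]  # dL/dW1[j][k] = dL/dz[j] * x[k]
    db1 = dz
    return dW1, db1, dw2, db2

def numeric_dW1(params, x, t, j, k, eps=1e-6):
    """Central finite difference for one entry of W1, used only to check backward()."""
    W1, b1, w2, b2 = params
    plus = [row[:] for row in W1]
    minus = [row[:] for row in W1]
    plus[j][k] += eps
    minus[j][k] -= eps
    return (loss((plus, b1, w2, b2), x, t) - loss((minus, b1, w2, b2), x, t)) / (2 * eps)

# Arbitrary example values, chosen so no hidden unit sits exactly at the ReLU kink.
params = ([[0.5, 0.4], [-0.6, 0.2]], [0.1, -0.1], [1.0, -0.7], 0.05)
x, t = [1.0, 2.0], 0.3

dW1, db1, dw2, db2 = backward(params, x, t)
for j in range(2):
    for k in range(2):
        print(j, k, dW1[j][k], numeric_dW1(params, x, t, j, k))
```

For this input the second hidden unit's pre-activation is negative, so the ReLU sends zero gradient back to its weights, and the finite-difference estimates agree with that.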